This blog explains how to archive financial documents with archiving object FI_DOCUMNT. The generic technical setup must already have been done; it is explained in this blog.

Object FI_DOCUMNT

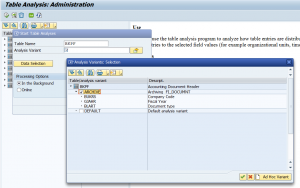

Go to transaction SARA and select object FI_DOCUMNT.

Dependency schedule:

No dependencies.

Main tables that are archived:

- BKPF (accounting document header)

- BSAD (accounting document secondary index for customers, cleared items)

- BSAK (accounting document secondary index for vendors, cleared items)

- BSAS (accounting document secondary index for G/L accounts, cleared items)

- BSEG / RFBLG (accounting document line items, stored in cluster RFBLG)

Technical programs and OSS notes

Write program: FI_DOCUMNT_WRI

Delete program: FI_DOCUMNT_DEL

Post processing program: FI_DOCUMNT_PST

Read program: the read program in SARA refers to FAGL_ACCOUNT_ITEMS_GL. You can run this program with both online data and archive files as the data source.

Reloading of FI_DOCUMNT data was supported in the past, but no longer is; see note 2072407 – FI_DOCUMNT: Reloading of archived data.

Relevant OSS notes:

- 1627937 – Balance does not match with line items – Archiving

- 1661074 – Archiving log error BL252 “Message number 999999 reached. Log is full”

- 1711034 – FI Archiving FI_DOCUMNT throws Message Withholding tax life (455 days) not reached

- 1794684 – FI Archiving FI_DOCUMNT displays Account Type Life not reached (T071).

- 1953138 – FBL3N always shows archived data

- 2072407 – FI_DOCUMNT: Reloading of archived data –> reloading no longer supported.

- 2147485 – How to check FI documents can be archived in FI_DOCUMNT_WRI

- 2233397 – FI_DOCUMNT_WRI: FI document is not archived

- 2266225 – Message no. FG257 when archiving object FI_DOCUMNT

- 2346296 – Technical details about financial accounting archiving

- 2545209 – Access to FI archived data after upgrading to S/4 Hana

- 2683107 – Archived items still exist in BSAK

- 2775018 – Archived items still exist in BSAK

- 2909406 – FI_DOCUMNT: Error while determining the BOR key is thrown

- 3071653 – FI_DOCUMENT archiving in S/4 HANA

- 3249255 – FI_DOCUMNT – Change in KSL and OSL values of ACDOCA post FI documents Archiving

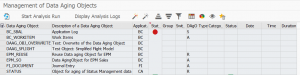

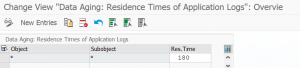

Application specific customizing

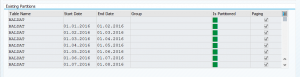

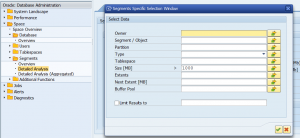

In the application-specific customizing for FI_DOCUMNT you can maintain the document residence time settings:

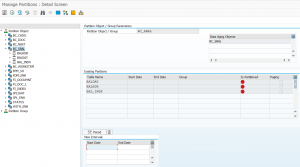
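The effect of these settings can be summarized in a few lines. The Python sketch below is a simplified model, not the logic of FI_DOCUMNT_WRI: it assumes a document is archivable once it has no open items and its document type life (in days, counted from the posting date) has passed. The class FiDocument, the function can_archive and the example value of 365 days for document type SA are hypothetical. The real write program also checks account type life, withholding tax life and more (see the notes listed above).

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class FiDocument:
    # Hypothetical, simplified view of an FI document (illustration only).
    company_code: str
    doc_number: str
    fiscal_year: int
    doc_type: str
    posting_date: date
    has_open_items: bool


def can_archive(doc: FiDocument, doc_type_life_days: dict[str, int],
                key_date: date) -> tuple[bool, str]:
    """Simplified archivability check: no open items and document type life reached.

    Assumption: residence time is counted in days from the posting date;
    document types without an entry get a residence time of 0 days.
    """
    if doc.has_open_items:
        return False, "document still has open items"
    life = doc_type_life_days.get(doc.doc_type, 0)
    if key_date < doc.posting_date + timedelta(days=life):
        return False, f"document type life ({life} days) not reached"
    return True, "archivable"


if __name__ == "__main__":
    life = {"SA": 365}  # hypothetical customizing value
    doc = FiDocument("1000", "100000001", 2015, "SA", date(2015, 3, 31), False)
    print(can_archive(doc, life, date(2016, 3, 31)))
```

The open item check comes first because, as the write run logs usually show, open items are the most common reason a document stays in the system.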

Executing the write run and delete run

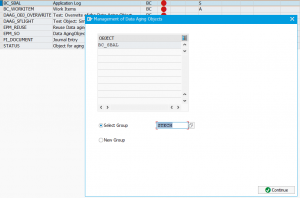

In transaction SARA, enter object FI_DOCUMNT and select the write run.

Select your data, save the variant and start the archiving write run.

Give the archive session a meaningful name that states the company code and fiscal year. You need this later on to select the right archive files for data retrieval.

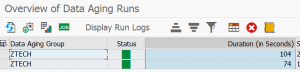

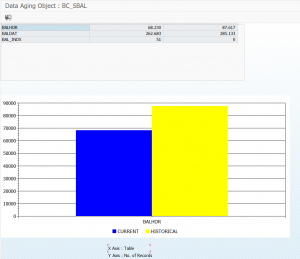

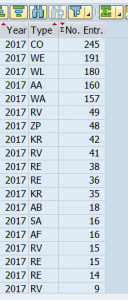
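A fixed naming pattern makes it easy to find the right sessions again. The Python sketch below assumes a hypothetical convention "CC &lt;company code&gt; FY &lt;fiscal year&gt;" for the archive session note; the functions session_note, parse_session_note and select_sessions are illustrative only and are not part of SARA.

```python
from __future__ import annotations

import re

# Hypothetical naming convention for the archive session note.
_NOTE_PATTERN = re.compile(r"^CC (\w{1,4}) FY (\d{4})$")


def session_note(company_code: str, fiscal_year: int) -> str:
    """Build a session note such as 'CC 1000 FY 2015'."""
    return f"CC {company_code} FY {fiscal_year}"


def parse_session_note(note: str) -> tuple[str, int] | None:
    """Return (company code, fiscal year), or None if the note does not follow the convention."""
    match = _NOTE_PATTERN.match(note.strip())
    if match is None:
        return None
    return match.group(1), int(match.group(2))


def select_sessions(notes: list[str], company_code: str, fiscal_year: int) -> list[str]:
    """Keep only the session notes for one company code and fiscal year."""
    return [n for n in notes if parse_session_note(n) == (company_code, fiscal_year)]
```

For example, select_sessions(["CC 1000 FY 2015", "CC 2000 FY 2015", "test run"], "1000", 2015) keeps only the first entry, which is the kind of targeted selection you want when picking archive files for a read program.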

After the write run has finished, check the logs. FI_DOCUMNT archiving runs at average speed and achieves a high archiving percentage (90 to 99%). Most documents that cannot be archived still contain open items.

For the items that cannot be archived, you can use transaction FB99 to check why. Read the full explanation in this blog.

The deletion run is standard: select the archive file and start the deletion run.

Post processing

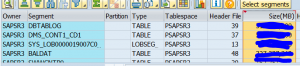

It is important to run the FI_DOCUMNT post processing. After archiving, records in the secondary index tables BSAK, BSAS and BSIS are still present, with field ARCHIV set to ‘X’. See notes 2683107 – Archived items still exist in BSAK and 2775018 – Archived items still exist in BSAK.

Standard SAP handles this field correctly, but custom-made reports and the average BI data analyst will not.

So run post processing directly after the deletion run.

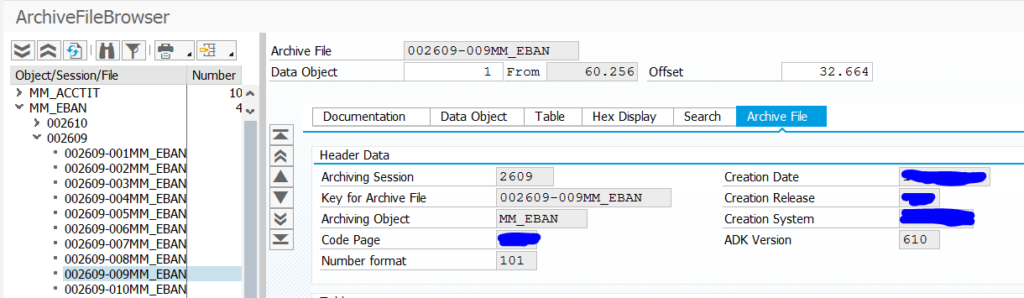
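If custom reports or BI extractions read the secondary index tables before post processing has run, they should skip the flagged records. The Python sketch below works on a hypothetical extract of BSAK rows represented as dictionaries; only the field names ARCHIV, BUKRS, BELNR and GJAHR come from the SAP tables, while the function name and sample values are assumed for illustration.

```python
from __future__ import annotations


def exclude_archived(rows: list[dict[str, str]]) -> list[dict[str, str]]:
    """Drop secondary index rows flagged as archived (ARCHIV = 'X').

    Rows without an ARCHIV value are treated as not archived.
    """
    return [row for row in rows if row.get("ARCHIV", "") != "X"]


if __name__ == "__main__":
    # Hypothetical BSAK extract taken before post processing ran.
    bsak_extract = [
        {"BUKRS": "1000", "BELNR": "5100000001", "GJAHR": "2015", "ARCHIV": "X"},
        {"BUKRS": "1000", "BELNR": "5100000002", "GJAHR": "2023", "ARCHIV": ""},
    ]
    for row in exclude_archived(bsak_extract):
        print(row["BELNR"])
```

Running post processing remains the real fix, since it removes these records; a filter like this is only a safety net in custom code.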

Data retrieval

For FI_DOCUMNT, multiple read programs are available. To select one, use the F4 search help:

Start the program, select the Data Source button, tick the archive option as well and select the archive files to read:

Because of the data volume, the query can run for a long time, so select the archive files with care.

Most finance programs to list data use this Data Source principle.

SARI infostructure issues

If archive sessions for infostructure SAP_FI_DOC_002 are in error in SARI, read the potential workaround below.

The errors appear when you try to refill the infostructure for these sessions: a number of documents already exist in the infostructure, and the infostructure table cannot contain duplicates.

The most common cause is that the variant of the write program was set up so that the same document was archived twice, into different archive files.

What can be done? If this is the cause and it is acceptable to have the same document in different files, you can ignore the archive session entries with errors in SARI. To avoid duplicate keys in the infostructure in future, you can add the file name as an extra key field to the infostructure. This can be done as follows:

- SARI -> Customizing -> SAP_FI_DOC_002 -> Display

- Technical data

- Change the field “File Name Processing” from ‘D’ to ‘K’.

Note: this method is untested.
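Why the extra key field helps can be shown with a toy model. The Python sketch below treats an infostructure as a table with a unique key; the class ToyInfoStructure and the document key (company code, document number, fiscal year) are hypothetical simplifications, not the real structure of SAP_FI_DOC_002. A fill for one archive file is rejected as a whole when any of its keys already exists, mimicking a session that ends in error in SARI.

```python
from __future__ import annotations


class ToyInfoStructure:
    """Toy model of an archive infostructure table with a unique key."""

    def __init__(self, file_name_in_key: bool) -> None:
        self.file_name_in_key = file_name_in_key
        self.rows: dict[tuple, str] = {}

    def _key(self, doc_key: tuple[str, str, int], file_name: str) -> tuple:
        # 'K' processing: the file name becomes part of the key; 'D': it does not.
        return (*doc_key, file_name) if self.file_name_in_key else doc_key

    def fill(self, file_name: str, doc_keys: list[tuple[str, str, int]]) -> bool:
        """Fill entries for one archive file; return False (no rows added) on a duplicate key."""
        new_keys = [self._key(dk, file_name) for dk in doc_keys]
        if any(k in self.rows for k in new_keys) or len(set(new_keys)) != len(new_keys):
            return False
        for k in new_keys:
            self.rows[k] = file_name
        return True


if __name__ == "__main__":
    doc = ("1000", "100000001", 2015)  # same document archived into two files
    for in_key in (False, True):
        info = ToyInfoStructure(file_name_in_key=in_key)
        results = [info.fill("file_A", [doc]), info.fill("file_B", [doc])]
        print(in_key, results, len(info.rows))
```

Without the file name in the key, the second fill fails as in the SARI error; with it, each file gets its own entry. The trade-off is that the same document then appears twice in the infostructure, which is only fine if duplicates across files are acceptable.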

Parallel processing

There is an option to archive financial documents in parallel processing mode. This is an advanced development option that uses archiving object FI_DOC_PPA. For more information, read OSS note 1779727 – FI_DOCUMNT: Integrating parallel process with archiving.

If your data volume is manageable and you can archive during nights and weekends, keep it simple and use FI_DOCUMNT.