With S4HANA, SAP has deprecated parts of its older code. In rare cases, this old code might still be required.

This blog explains the S4HANA blacklist. It answers the following questions:

- How do I see that a dump is caused by the S4HANA blacklist?

- Where can I find more background information on the S4HANA blacklist?

The S4HANA blacklist dump

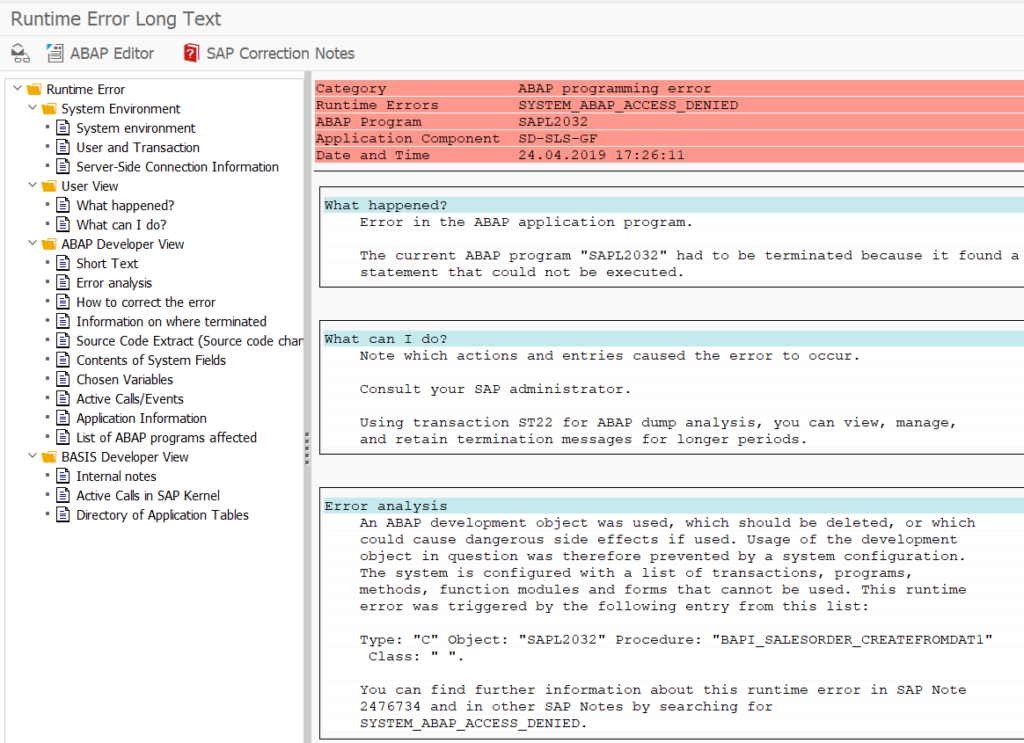

If the S4HANA system raises an ABAP dump with the runtime error SYSTEM_ABAP_ACCESS_DENIED, it is an S4HANA blacklist dump. See OSS note 2476734 – Runtime error SYSTEM_ABAP_ACCESS_DENIED. The dump may instead refer to OSS note 2295840 – Outbound / Inbound calls from external to RFC FM are blocked when the FM is blacklisted and the UCON-Check is active.
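If you collect dump or error texts in a script, a small helper can flag the blacklist case and point to the two notes named above. This is only a minimal sketch: the function names `is_blacklist_dump` and `notes_for` and the `via_rfc` flag are hypothetical, and the check is a plain text match on the runtime error name, not an SAP API.

```python
from typing import List

# Runtime error name and note numbers as quoted in this blog.
BLACKLIST_RUNTIME_ERROR = "SYSTEM_ABAP_ACCESS_DENIED"
NOTE_DUMP = "2476734"  # Runtime error SYSTEM_ABAP_ACCESS_DENIED
NOTE_RFC = "2295840"   # Blocked calls to blacklisted RFC function modules


def is_blacklist_dump(error_text: str) -> bool:
    """Return True if the text mentions the blacklist runtime error."""
    return BLACKLIST_RUNTIME_ERROR in error_text.upper()


def notes_for(error_text: str, via_rfc: bool = False) -> List[str]:
    """Return the OSS notes to read first for this error text.

    via_rfc is a hypothetical flag the caller sets when the error
    came back from an RFC call made by an external application.
    """
    if not is_blacklist_dump(error_text):
        return []
    notes = [NOTE_DUMP]
    if via_rfc:
        notes.append(NOTE_RFC)
    return notes
```

For example, `notes_for("Runtime error SYSTEM_ABAP_ACCESS_DENIED", via_rfc=True)` yields both note numbers, while any other error text yields an empty list.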

Blacklisted RFC calls

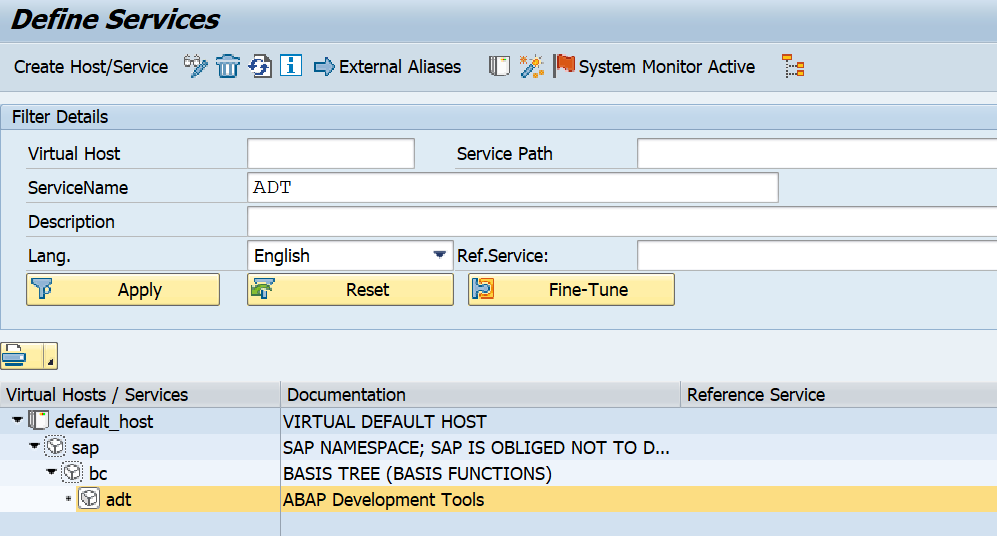

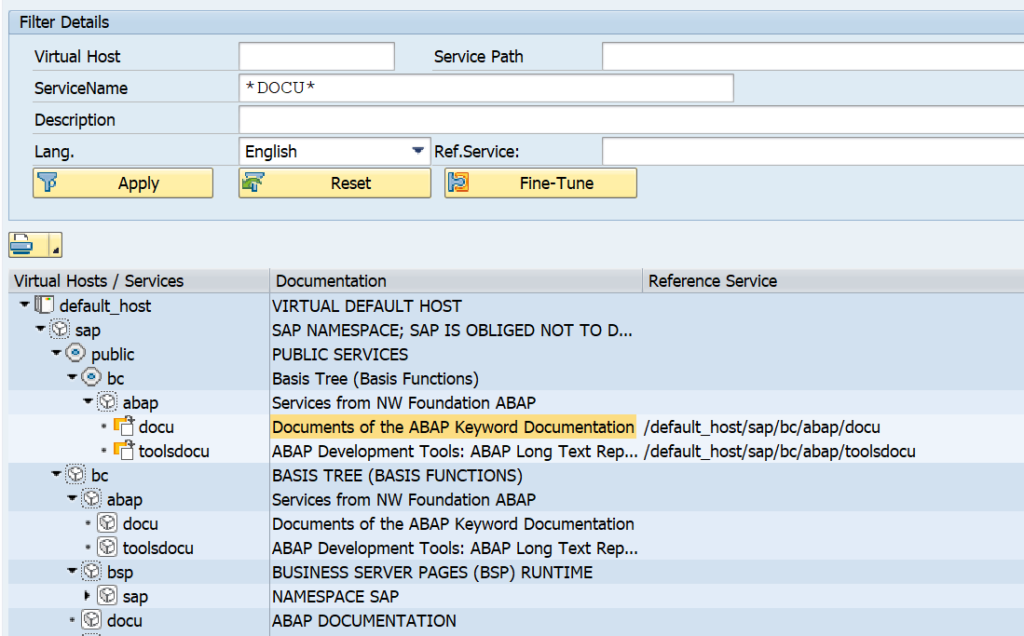

When you call a blacklisted RFC function module from an external application, you can get a similar dump that refers to OSS note 2295840 – Outbound / Inbound calls from external to RFC FM are blocked when the FM is blacklisted and the UCON-Check is active. This note is old and refers to the newer OSS note 2416705 – Outbound / Inbound calls from external to RFC FM are blocked when the FM is blacklisted using Blacklist Object. You can run program RS_RFC_BLACKLIST_COMPLETE to see which function modules are blacklisted.

What to do when you hit a blacklisted item?

The best approach is to stop using the blacklisted object and look for the functional alternative provided by SAP. Search for the simplification item OSS note that covers it. In almost all cases SAP provides a solution.

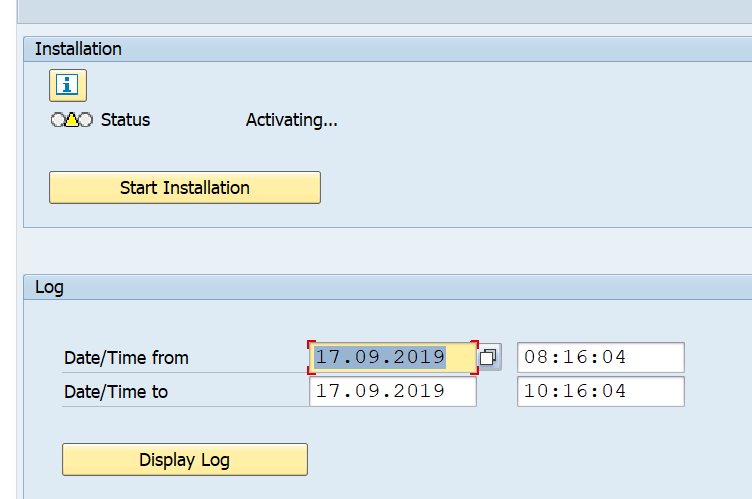

Activating a blacklisted item

OSS note 2249880 – Dump SYSTEM_ABAP_ACCESS_DENIED caused through Blacklist Monitor in SAP S/4HANA on premise contains the procedure to activate a blacklisted item. For RFC calls, follow the instructions in OSS note 2408693 – Override blacklist of Remote Enabled Function Modules.

Make sure you have written clearance from both SAP and the system owner before executing this procedure. You can lose SAP support, and future system upgrades can be severely blocked. Only execute this as a last resort, after explicit approval.